Jan 30, 2026

Why Your Business Needs a Private AI Chatbot Now

Discover how a private AI chatbot can secure your data, slash costs, and boost productivity. Learn from our founder's journey to build a secure AI solution.

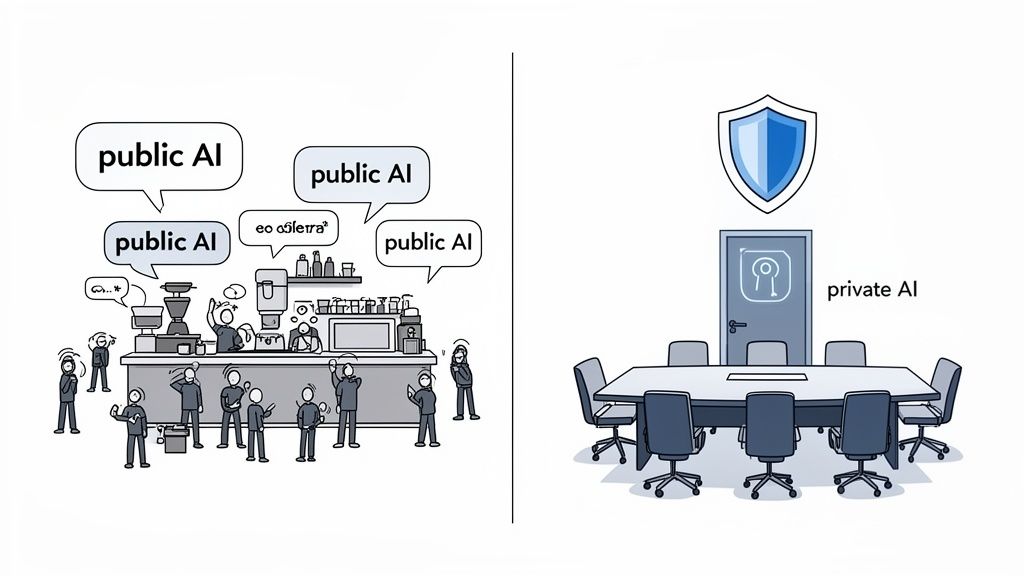

Think of a private AI chatbot as the digital equivalent of a secure, confidential boardroom. I've learned that it's an environment built exclusively for your company, where your sensitive data, trade secrets, and internal conversations stay completely within your control. Nothing gets out, and nothing is used to train public models.

It’s the stark difference between that private boardroom discussion and shouting your ideas across a crowded public café.

Our Wake-Up Call with Public AI Tools

When public AI tools like ChatGPT and Claude first hit the scene, we at Thareja Technologies dove in headfirst, just like everyone else. We were using them for everything—spinning up code snippets, drafting marketing emails, you name it. For a little while, it felt like we’d discovered a secret weapon.

But it didn't take long for the downsides to appear. Our monthly subscription bills started to balloon as more people on the team began relying on different AI platforms. Worse than the cost, though, was the hit to our productivity. Our developers found themselves constantly copying and pasting code between various AI chats, losing precious context and burning time they could have spent creating. That constant context-switching was easily costing us 5-10 hours per developer every single week.

The Moment Everything Changed

The real alarm bell wasn't about the money or lost time, though. It came in a single, heart-stopping moment. An engineer, pushing late to hit a tight deadline, accidentally pasted a sensitive piece of a client's source code into a public AI tool to help with debugging. The weight of what he'd done sank in just seconds after he pressed Enter.

We scrambled. The next 48 hours were a blur of damage control, frantic calls with our legal team, and a knot in our stomachs as we hoped that a client's critical intellectual property hadn't just been absorbed into a massive public model we had zero control over. That incident was the wake-up call we needed. We were playing with fire, and we had very nearly been burned. It was a tangible business risk that could have cost us a major client and shattered the reputation we’d worked so hard to build.

That near-miss forced us to confront a hard truth: by relying on public AI, we had given up control over our most valuable asset—our data. It was no longer a theoretical risk; it was a clear and present danger to our business (Zuboff, 2019).

Turning a Pain Point into a Purpose

That single experience became the spark that led us to build our own private AI chatbot. We knew we couldn't be the only ones. With the global generative AI chatbot market projected to hit USD 113.35 billion by 2034, countless businesses are grappling with this same dilemma (Fortune Business Insights, 2024). You can dig deeper into market projections on the generative AI chatbot industry.

We needed an environment where our team could tap into the incredible power of AI without ever compromising on security. This wasn't just about creating another piece of software; it was about building a secure workspace where our team could innovate safely. For any business that's serious about protecting its IP and growing responsibly, controlling your AI environment is an absolute necessity, not an optional luxury (O'Neil, 2016).

This journey from risk to resolution is exactly why we're so passionate about the power of a private AI chatbot. It’s about laying a foundation of trust so you and your team can innovate without fear. After all, true progress always requires a secure platform to build upon (Sinek, 2009).

What Makes a Private AI Chatbot Truly Private?

Let’s cut through the noise. The term "private AI chatbot" gets thrown around a lot, but what does it actually mean for your business? It’s not about a special feature or a clever marketing slogan.

It boils down to one fundamental principle: data sovereignty.

This means you—and only you—have complete control over your data. You decide where it lives, who can access it, and how it’s used. I often use an analogy with my team: using a public AI is like discussing your most sensitive business strategy in a crowded coffee shop. Sure, you might get work done, but you have no idea who is listening in.

A private AI, on the other hand, is like having that same conversation inside your own secure, soundproof boardroom. The doors are locked. Access is controlled. The conversations that happen inside, stay inside. That feeling of security is what a truly private AI delivers.

The Core Pillars of AI Privacy

When we were building Thareja AI, we obsessed over what "private" truly meant in practice. It came down to three non-negotiable pillars that protect your business from financial loss, reputational damage, and the theft of your intellectual property.

Data Control and Isolation: Your data should never be co-mingled with anyone else's. A private AI ensures your information is stored in a dedicated, isolated environment, which is crucial for preventing cross-contamination and unauthorized access.

Zero Training on Your Data: This is the most critical promise of all. A private AI provider must guarantee that your proprietary information—your source code, client lists, financial projections—will never be used to train their public or commercial models. Your competitive advantage should never become their training data.

Robust Access and Encryption: Real privacy requires layers of security. This includes encryption for data both in transit and at rest, along with strict, role-based access controls, so that only authorized team members can view or interact with sensitive information.
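
To make the role-based access control in that last pillar concrete, here is a minimal Python sketch. The role names, permission strings, and the `can_access` helper are hypothetical and chosen purely for illustration; they are not taken from Thareja AI or any specific product.

```python
# Hypothetical role-to-permission map. Real systems usually load this from
# an identity provider or policy store rather than hard-coding it.
ROLE_PERMISSIONS = {
    "admin": {"read:source_code", "read:financials", "read:client_lists"},
    "developer": {"read:source_code"},
    "finance": {"read:financials"},
}


def can_access(role: str, permission: str) -> bool:
    """Return True only if the role explicitly grants the permission."""
    # Unknown roles get an empty permission set, so access is denied by default.
    return permission in ROLE_PERMISSIONS.get(role, set())


if __name__ == "__main__":
    print(can_access("developer", "read:source_code"))   # True
    print(can_access("developer", "read:financials"))    # False
    print(can_access("contractor", "read:source_code"))  # False
```

The design choice worth noticing is deny-by-default: a role that isn't listed gets nothing, rather than everything.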

“Privacy is not an option, and it shouldn’t be the price we accept for just getting on the internet.” - Gary Kovacs, former CEO of Mozilla Corporation.

This principle is the bedrock of private AI. You should never have to trade your security for functionality. Protecting your data isn't just about avoiding leaks; it’s about maintaining the trust your clients and team place in you. The potential cost of a single breach often far outweighs the perceived convenience of less secure tools.

A Practical Checklist for Evaluating Providers

When you're looking at a private AI chatbot, don't just take their marketing claims at face value. You need to look for concrete proof of their commitment to your data's security. To see what this looks like at scale, look at how major enterprises handle it, as in the case study "Wells Fargo's AI: 245M Interactions, Zero PII Leaks."

Here is a checklist of what we consider essential:

SOC 2 Compliance: Does the provider have a current SOC 2 report? This independent audit attests that the provider has controls in place to manage customer data securely and protect client privacy.

Transparent Data Policies: Can you easily find and understand their data processing agreement? It should explicitly state that your data will not be used for model training. You can see our own commitment in our Data Processing Agreement as a clear example.

Deployment Options: Do they offer deployment in a private cloud or on-premise? This gives you direct control over the physical or virtual location of your data, a key factor for regulatory compliance like GDPR or HIPAA.

Encryption Standards: Are they using industry-standard encryption, such as AES-256 for data at rest and TLS for data in transit? Anything less is a major red flag.

This isn’t a theoretical exercise. These are the practical questions that separate a genuinely private solution from one that simply uses "private" as a buzzword.

On-Premise vs. Private Cloud: Where Should Your AI Live?

Once we decided a private AI was the right move, we immediately hit a very practical, very important fork in the road: Where is this thing actually going to live? This isn’t just a technical footnote; it’s a decision that will shape your budget, your timeline, and how much control you truly have. We faced this exact choice when building Thareja AI, and it really boiled down to two main paths: on-premise and private cloud.

I explain it like this: an on-premise deployment is like building your own personal fortress from the ground up. You buy the land (your servers), build the walls (network security), and hire your own guards (a dedicated IT team). Every piece of hardware, every byte of data, lives within your physical domain.

This gives you the absolute pinnacle of control over your data and infrastructure. For organizations in heavily regulated fields like finance or healthcare, this level of physical security can be a powerful, and sometimes non-negotiable, advantage. But that control comes with a pretty hefty price tag.

The Hard Truth About On-Premise AI

When we started to pencil out what an on-premise setup would actually cost, the numbers went way beyond just buying a few powerful servers. We had to factor in specialized, power-hungry GPUs, intricate cooling systems, physical security for the server room, and—this is a big one—the highly skilled, and highly paid, people needed to manage it all.

The initial capital investment was significant, but it was the ongoing operational costs that really made us think twice. We projected a 20-25% annual jump in our IT budget just for maintenance, electricity, and salaries. That’s a serious, long-term financial commitment.

On top of the cost, the time investment was a huge blocker. Just getting the hardware, setting it up, and configuring all the software could have easily pushed our launch back by more than six months. In the world of AI, that’s an eternity.

The Private Cloud: Your Own Secure Vault-for-Lease

This reality led us straight to the private cloud model. I like to think of it as leasing a dedicated, high-security vault inside a world-class facility like Fort Knox. The vault is 100% yours and completely isolated from everyone else's, but the facility owners handle the guards, the building maintenance, and the power grid.

You get data isolation and privacy comparable to an on-premise setup, but without the massive headache of managing physical hardware. It’s a dedicated, single-tenant environment, which means your resources are never shared with anyone else, a critical piece of the puzzle for maintaining data sovereignty.

When we modeled this path for Thareja AI, the benefits were obvious almost immediately.

Our calculations showed that a private cloud deployment would save us an estimated 40% in upfront capital expenditure. Even more crucially, it slashed our projected time-to-market by at least six months compared to building and staffing our own server farm.

This wasn't just about saving money. It was about speed and focus. It allowed us to pour our energy and resources into what we do best—building an incredible AI platform—instead of getting bogged down in the business of running a data center. You can dive deeper into how different AI platforms are structured to see what might fit your own needs.

Making the Right Choice for Your Business

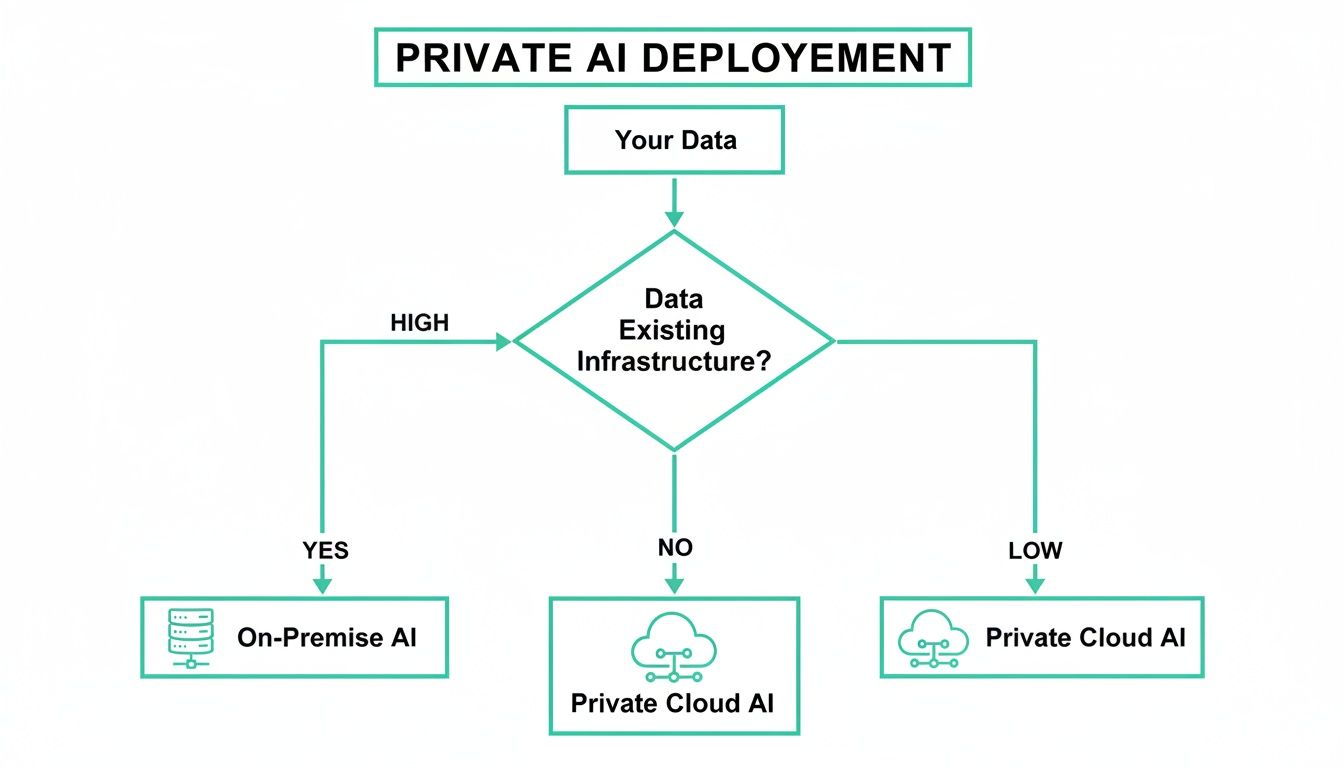

So, how do you decide? The choice between on-premise and private cloud isn't about which one is "better" in a vacuum, but which is the right fit for your specific situation.

Choose On-Premise if: You operate under extreme regulatory constraints that demand physical data residency, you already have a skilled IT infrastructure team in place, and you have the capital to make a major upfront investment.

Choose Private Cloud if: You need ironclad security and data isolation but want to avoid a massive capital outlay, get to market faster, and keep your internal teams focused on your core business, not on managing infrastructure.
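
The two rules of thumb above can be written down as a tiny decision helper. This is only a sketch: the function name and its three yes/no inputs are assumptions made for illustration, and it deliberately collapses a nuanced decision into the three conditions named in the text.

```python
def choose_deployment(
    requires_physical_data_residency: bool,
    has_infrastructure_team: bool,
    has_upfront_capital: bool,
) -> str:
    """Suggest on-premise only when all three conditions from the text hold."""
    if (
        requires_physical_data_residency
        and has_infrastructure_team
        and has_upfront_capital
    ):
        return "on-premise"
    # Otherwise default to the lower-overhead option described above.
    return "private cloud"


if __name__ == "__main__":
    print(choose_deployment(True, True, True))    # on-premise
    print(choose_deployment(False, True, False))  # private cloud
```

Treat the output as a conversation starter with your legal and IT teams, not a verdict.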

For us, the private cloud was the clear winner. It gave us the uncompromising security we needed without forcing us to sacrifice the speed and financial agility that are vital for a growing company. It was the perfect balance of control and practicality.

The Tangible Business Impact: Money, Time, and Risk

Look, adopting a private AI chatbot isn't some abstract tech upgrade. It's a hard-nosed business decision with a return you can see and measure. Whenever I talk with other founders, the conversation inevitably lands on the real-world impact. We always break it down into three buckets: money, time, and risk.

These aren’t just hollow buzzwords. They're the core metrics that tell you if a new piece of tech is actually moving the needle for your business.

How We Slashed Our AI Subscription Costs by 80 Percent

The first and most immediate hit to our P&L was a positive one. Before we built our own platform, our teams were using a chaotic patchwork of specialized AI tools. We had one subscription for writing, another for coding, a third for image generation, and the costs just kept spiraling.

By bringing access to over 50 different models under one roof with a single private AI chatbot, we were able to consolidate those expenses overnight. For our team of 10, this was a massive change.

We did the math and found we were shelling out over $2,500 per month on all those different AI subscriptions. Our unified platform dropped that cost to around $500. That’s a potential savings of over $2,000 every single month, or $24,000 a year—money we could plow right back into developing our product.
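
If you want to run the same math on your own numbers, a few lines of Python will do. The function name is made up for this example; the inputs are the rounded monthly figures quoted above ($2,500 before, $500 after).

```python
def subscription_savings(before_monthly: float, after_monthly: float) -> dict:
    """Compare monthly AI spend before and after consolidation."""
    monthly = before_monthly - after_monthly
    return {
        "monthly_savings": monthly,
        "annual_savings": monthly * 12,
        "percent_reduction": round(100 * monthly / before_monthly, 1),
    }


if __name__ == "__main__":
    print(subscription_savings(2500, 500))
    # {'monthly_savings': 2000, 'annual_savings': 24000, 'percent_reduction': 80.0}
```

Swap in your own before and after totals to see whether consolidation is worth it for your team.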

Giving 14 Hours Back to Every Employee, Every Week

The second major win was in time—our team's most valuable asset. The constant context-switching between different AI models was a silent productivity killer. A developer would be working on a code snippet in one tool, then have to copy and paste the entire conversation into another just to get a different perspective. It was maddeningly inefficient.

Introducing features like mid-chat model switching and unified document intelligence completely changed the game. Our internal data showed these capabilities slashed task completion time for our content and development teams by an average of 35%.

Think about that. For a full-time employee working 40 hours a week, a 35% reduction works out to as much as 14 hours saved, assuming the whole week goes to the kinds of tasks these features speed up. That time isn't just a number on a spreadsheet; it's the freedom to tackle bigger challenges, innovate on new features, and deliver more value to our clients instead of getting bogged down in repetitive, soul-crushing tasks.
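
Here is that calculation as a short Python sketch. The `share_of_week_affected` parameter is an assumption added for illustration: the 14-hour figure corresponds to the case where the entire 40-hour week is spent on tasks the speed-up applies to, and the parameter lets you model a smaller share.

```python
def weekly_hours_saved(
    hours_per_week: float,
    time_reduction: float,
    share_of_week_affected: float = 1.0,
) -> float:
    """Hours saved per week given a fractional reduction in task time."""
    return hours_per_week * share_of_week_affected * time_reduction


if __name__ == "__main__":
    # Whole week affected, 35% faster.
    print(f"{weekly_hours_saved(40, 0.35):.1f} hours")
    # Only half the week is spent on affected tasks.
    print(f"{weekly_hours_saved(40, 0.35, 0.5):.1f} hours")
```

For most teams the honest number sits somewhere between those two cases, which is still a meaningful amount of time.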

This flowchart shows that critical decision point—how to deploy a private AI based on where your data already lives.

This decision directly impacts the cost, time, and risk tied to your AI strategy, making it a pivotal first step.

Sidestepping a Six-Figure Data Breach

The third, and arguably most critical, impact is risk mitigation. This is where the value becomes almost immeasurable. Last year, we worked with a client in the financial sector who was in the final stages of a highly sensitive merger. They were juggling hundreds of confidential documents, from financial statements to strategic roadmaps.

Their legal team was using a public AI tool to summarize these documents, completely unaware that their confidential queries were being fired off to third-party servers. Our private AI chatbot, deployed in their isolated cloud environment, kept every single bit of that data completely locked down.

We later learned that a competitor of their acquisition target had suffered a data breach traced back to a similar public AI tool. The leak cost that company an estimated six figures in regulatory fines and legal fees. Our client avoided that exact same nightmare.

This isn’t some theoretical benefit; it’s about preventing catastrophic financial and reputational damage (Solove, 2008). The chatbot market is projected to explode to over $27 billion by 2030, and as adoption grows, so does the attack surface for these kinds of risks. If you want to go deeper on these trends, you can explore more insights on AI chatbot statistics.

A private AI turns security from a cost center into a genuine competitive advantage (Schneier, 2015). It gives you the peace of mind to innovate without constantly looking over your shoulder.

Building Your AI's Brain with Secure Company Data

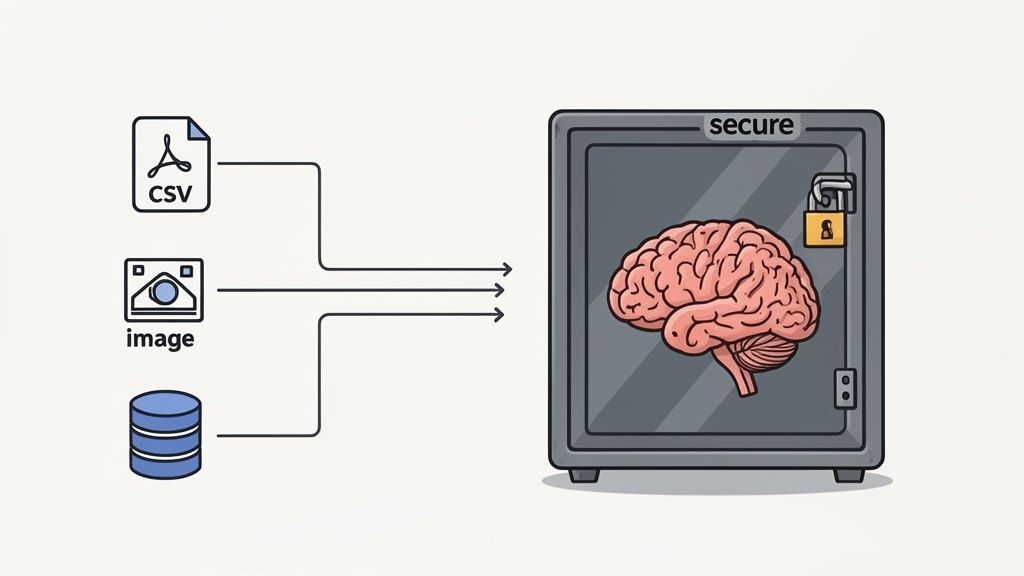

A private AI chatbot is a powerful engine, but it needs the right fuel to run. For your business, that fuel is your unique, proprietary data—the very knowledge that gives you a competitive edge. The real magic begins when you securely connect this internal knowledge, transforming a generic tool into an expert extension of your team.

This is about much more than just uploading a few files. It’s about creating a living "brain" for your AI that understands your business from the inside out. The best part? That sensitive information never has to leave your secure environment, giving you complete peace of mind.

From Static Files to Dynamic Insights

For most businesses, the journey starts by connecting static documents. This is the foundational layer of your AI’s knowledge.

We begin by integrating the file types your teams use every day, turning them into intelligent agents your chatbot can talk to. Suddenly, your people can ask complex questions and get back synthesized, actionable answers in seconds.

PDFs and Text Documents: Think of all your research reports, legal contracts, and internal process manuals. Instead of searching them one by one, your team can ask, "What are the key liability clauses in our Q3 client agreements?"

CSVs and Spreadsheets: Financial models, project timelines, and raw sales data become instantly interactive. A project manager could ask, "Show me all tasks from this spreadsheet that are behind schedule and assigned to the design team."
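
To give a feel for what a spreadsheet question like that resolves to underneath, here is a minimal Python sketch using only the standard library. The sample data, the column names (`task`, `team`, `due_date`, `status`), and the `overdue_tasks` helper are all hypothetical; a real chatbot would translate the natural-language question into a query along these lines rather than run this exact code.

```python
import csv
import io
from datetime import date

# Hypothetical spreadsheet export; the column names are assumptions.
SAMPLE_CSV = """task,team,due_date,status
Homepage mockup,design,2026-01-10,in_progress
API integration,engineering,2026-01-05,in_progress
Brand guidelines,design,2026-02-20,in_progress
Icon set,design,2026-01-12,done
"""


def overdue_tasks(csv_text: str, team: str, today: date) -> list[str]:
    """Return names of unfinished tasks for `team` whose due date has passed."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return [
        row["task"]
        for row in rows
        if row["team"] == team
        and row["status"] != "done"
        and date.fromisoformat(row["due_date"]) < today
    ]


if __name__ == "__main__":
    print(overdue_tasks(SAMPLE_CSV, "design", date(2026, 1, 30)))
    # ['Homepage mockup']
```

The value of the chatbot is that your project manager never has to write or even see this filter; they just ask the question.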

This ability to converse with your documents is a game-changer. It frees up an incredible amount of time previously lost to manual digging. It's the difference between owning a library and having a personal librarian who's read every single book.

Connecting to Your Live Business Systems

Documents are a fantastic starting point, but the true power is unlocked when you connect your chatbot to the live systems that run your business. This is where your private AI graduates from a knowledgeable assistant to an active, operational partner. We make this happen through secure API connections.

Of course, protecting your corporate data during this process is non-negotiable. That's why adopting robust secrets management best practices is essential to ensure credentials and data streams remain locked down.
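
One common piece of that practice is keeping credentials out of source code and reading them from the environment at runtime. The Python sketch below shows the idea; the helper names and the `ANALYTICS_API_KEY` variable are made up for this example, and a production setup would more likely pull secrets from a dedicated secrets manager.

```python
import os


def load_api_key(env_var: str) -> str:
    """Read a credential from the environment and fail loudly if it is absent."""
    value = os.environ.get(env_var)
    if not value:
        raise RuntimeError(
            f"Missing required secret: set the {env_var} environment variable"
        )
    return value


def mask(secret: str, visible: int = 4) -> str:
    """Show only the last few characters so logs never contain the full key."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


if __name__ == "__main__":
    # Demo only: ANALYTICS_API_KEY is a made-up name, and a placeholder value
    # is set here so the example runs. Never set real keys in code.
    os.environ.setdefault("ANALYTICS_API_KEY", "demo-key-1234")
    key = load_api_key("ANALYTICS_API_KEY")
    print(mask(key))
```

Failing fast on a missing key, and masking keys anywhere they might be logged, are two small habits that prevent a surprising number of leaks.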

Let me give you a real-world example. We recently worked with a digital marketing agency that was drowning in manual reporting. The team was spending hours each week pulling data from Google Analytics and HubSpot, then painstakingly compiling it into client-facing reports. It was slow, tedious, and frustratingly prone to human error.

We helped them connect their private AI directly to those platforms via secure APIs. The result? They now have a chatbot that can generate a comprehensive weekly performance report for any client in about 30 seconds.

The business impact was immediate. They saved an average of 5 hours per client each month. For an agency with 20 clients, that's 100 hours of high-value employee time reclaimed every single month—time they now pour back into strategy and strengthening client relationships. You can explore how different models handle this kind of data analysis in our guide to comparing AI models.

This is what it means to build your AI's brain. It's about securely weaving together all your separate data sources—from static PDFs to live CRMs—into a single, intelligent, and conversational interface.

Your Phased Implementation: The Crawl, Walk, Run Approach

Jumping headfirst into an enterprise-wide AI rollout is a recipe for chaos. When I talk with business leaders, their biggest fear isn't about the technology itself—it's about overwhelming their teams and disrupting the workflows that keep the business running. It's a completely valid concern. All the initial excitement can evaporate fast if people feel like a new tool is just another thing being forced on them.

This is exactly why we never recommend a "big bang" launch. Instead, we lean on a clear, actionable mental model that I call the "Crawl, Walk, Run" approach. It’s a phased roadmap designed to build momentum, prove real value, and get everyone on board by minimizing disruption and maximizing adoption.

This method transforms what feels like a daunting project into a manageable and genuinely exciting journey.

Crawl: Start Small, Win Big

The "Crawl" phase is all about focus. You start with a small, handpicked pilot team and a single, high-impact use case. The goal here isn't to revolutionize the entire company overnight. It's to find one specific, nagging pain point and solve it brilliantly. This creates a powerful internal case study that speaks for itself.

We recently helped a fast-growing startup that was completely tangled up in a messy web of public AI tools. Their customer support team was spending about 30% of their day answering the same handful of basic questions over and over again. This was the perfect "Crawl" target.

Action: We deployed a private AI chatbot exclusively for their 5-person support team.

Data Source: We securely connected it to their existing library of FAQ documents and product manuals.

Result: Within two weeks, the bot was handling over 60% of tier-1 support queries automatically. With roughly 30% of the day previously going to those repeat questions, that freed up close to a fifth of the team's day for the complex customer issues where they could truly make a difference.

This small, focused win created a huge buzz. Other departments saw the results and, instead of us pushing the tech on them, they started coming to us asking when it would be their turn.

Walk: Expand and Connect

Once you've got that initial success story lighting the way, you’re ready for the "Walk" phase. This is where you expand the rollout to more departments and begin integrating more complex data sources. You’ve already proven the value; now it’s time to scale the impact.

For that same startup, the sales team was the next logical step. They saw the time savings in the support department and wanted in on the action.

We connected their private AI to the company's CRM. Suddenly, the sales team could generate personalized follow-up emails, summarize call notes, and pull client histories just by asking the chatbot a simple question.

This single integration saved each sales rep an average of 4-5 hours per week on tedious administrative tasks. The "Walk" phase is all about connecting the dots between your different business systems, turning your AI from a single-task tool into a true multi-departmental asset.

Run: Full Enterprise Integration

The "Run" phase is the ultimate goal: achieving a seamless integration where your private AI chatbot is deeply embedded into your core business workflows. It’s no longer just a tool people log into; it’s an intelligent layer that assists them inside the applications they already use every single day.

By this stage, our startup client was all in. We helped them embed the AI directly into their internal project management system. Now, developers can get code suggestions, marketers can generate campaign ideas, and leadership can ask for project status updates—all from within one unified environment.

The AI world is shifting quickly, and a fragmented approach is becoming a real liability. As ChatGPT's early dominance wanes and competitors like Google Gemini rise, the market is fracturing. A recent analysis shows ChatGPT's market share fell from 87.2% to just 68% in a year, while Gemini surged to 18.2%. This reality underscores the power of a unified platform like Thareja AI, which centralizes access to over 50 top models and insulates your business from all that market volatility. You can explore the full analysis on AI market dynamics here.

This phased approach took the startup's operations from chaotic and risky to streamlined and secure in just three manageable steps.

Your Questions About Private AI Answered

When I talk with founders and teams about building their own private AI chatbot, the same practical questions always pop up. It's one thing to get the concept, but it's another thing entirely to picture how it actually fits into your day-to-day operations. Let's dig into some of the most common questions I hear.

How Long Does It Realistically Take To Set Up?

This is always the first question, and the answer is refreshingly simple: much faster than you think.

With a modern private cloud solution, we’ve seen businesses go from a kickoff meeting to a fully functional pilot in less than a week. For one of our clients, a small e-commerce business, we had their private AI chatbot connected to their entire product catalog and all their support documents in just three business days.

The key is that you're not getting bogged down building physical infrastructure from scratch. All the heavy lifting—like server setup, security hardening, and model integration—is already handled. Your focus is on the fun part: connecting your data and defining the specific problems you want the AI to solve. That's where the real value is.

Is It Only for Highly Technical Teams?

Absolutely not. This was a core principle for us when we designed Thareja AI. While the technology running under the hood is incredibly complex, the user experience is designed to be the complete opposite.

If your team can use tools like Slack or Google Drive, they have all the skills they need to use a private AI chatbot. The process of uploading documents or connecting a data source through an API is meant to feel intuitive, not intimidating.

I've always believed that the power of AI should be in the hands of everyone in an organization, not just the engineering department. In my experience, the most creative and impactful applications come from the non-technical teams who are closest to the customer or the business problem they're trying to solve (Brynjolfsson & McAfee, 2014).

What Is the Most Common Mistake to Avoid?

The biggest pitfall I see, time and again, is trying to boil the ocean on day one. Leaders get excited by the possibilities and immediately want to connect every single database, document, and system they have. This approach almost always leads to a stalled project and a lot of frustration.

The "Crawl, Walk, Run" method isn't just a catchy phrase; it's a critical strategy for success. Start with one clearly defined problem. For instance, focus on automating the summarization of daily sales reports. Nail it, put a number on the win (say, $500 per month in time saved), and then expand from there. Success breeds momentum (Duhigg, 2012).

This focused approach turns what feels like a massive project into a series of achievable, satisfying wins. It’s how you build confidence across the company, get genuine buy-in from your team, and ensure the tool is adopted with excitement instead of resistance. To build that initial trust, security and privacy must be the foundation, not an afterthought (Solove, 2008). A private environment gives you the safe sandbox you need for this kind of experimentation and growth (Schneier, 2015).

Final Takeaway: Think of a private AI chatbot not as a product, but as a vault. The strength of the lock (encryption), the thickness of the walls (data isolation), and the clarity of the access log (compliance) are what determine whether your assets are truly safe inside. Ready to build your own secure, intelligent workspace without all the complexity? Explore how Thareja Technologies Inc. unifies the world's best AI models in a single, private environment. Get started at thareja.ai.